Publication

From Code to Play: Benchmarking Program Search for Games Using Large Language Models

We are happy to share that the joint work of our PhD student Manuel Eberhardinger and his co-authors, From Code to Play: Benchmarking Program Search for Games Using Large Language Models, has been accepted for publication in IEEE Transactions on Games (2025).

Abstract

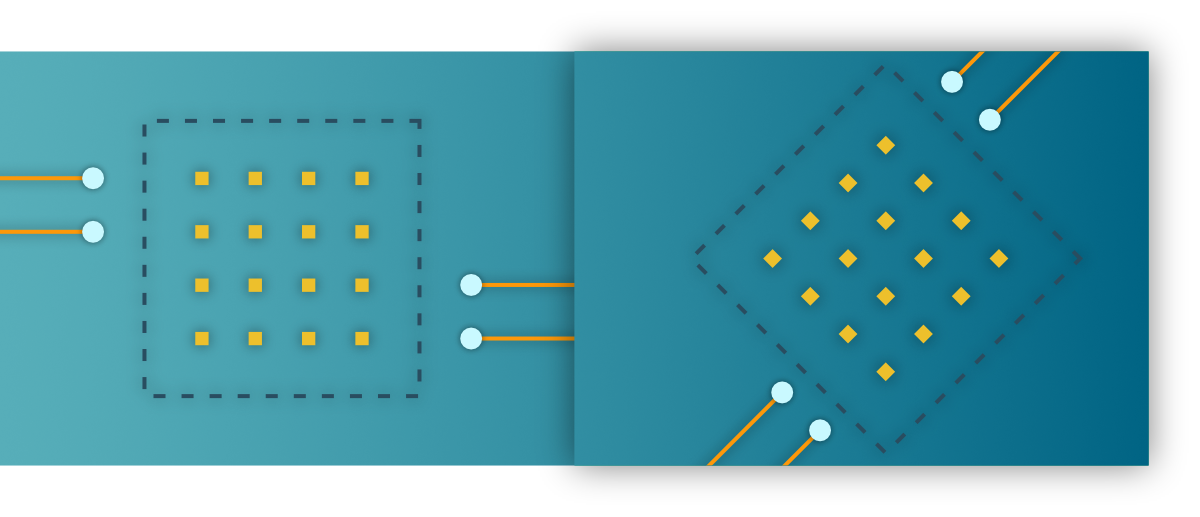

Large language models (LLMs) have shown impressive capabilities in generating program code, opening exciting opportunities for applying program synthesis to games. In this work, we explore the potential of LLMs to write usable code for a wide range of gaming applications, focusing on two programming languages, Python and Java. We use a hill-climbing algorithm, where the mutations and seeds of the initial programs are controlled by LLMs. For Python, the framework covers various game-related tasks, including five miniature versions of Atari games, ten levels of Baba is You, an environment inspired by Asteroids, and a maze generation task. For Java, the framework contains 12 games from the TAG tabletop games framework. Across 29 tasks, we evaluated 11 language models for generating Python and Java code. Our findings suggest that the performance of LLMs depends more on the task than on model size. In addition, the experiments show that running the hill-climbing algorithm multiple times with fewer iterations is better than a single run with more iterations.
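To make the search procedure in the abstract more concrete, here is a minimal sketch of hill climbing over program source code, with an optional restart wrapper. It is not the authors' implementation. The functions `stub_llm_seed` and `stub_llm_mutate` are hypothetical stand-ins for the LLM calls that would write and mutate programs, and the toy task (recovering `3 * x + 2`) and scoring function are assumptions chosen only so that the example runs without a model or a game environment.

```python
import random
import re

# Hypothetical program template; in the paper's setting an LLM writes the code.
TEMPLATE = "def policy(x):\n    return {a} * x + {b}\n"


def stub_llm_seed(rng):
    """Stand-in for an LLM call that proposes an initial program."""
    return TEMPLATE.format(a=rng.randint(-5, 5), b=rng.randint(-5, 5))


def stub_llm_mutate(source, rng):
    """Stand-in for an LLM call that edits a program: nudge one integer by 1."""
    numbers = list(re.finditer(r"-?\d+", source))
    target = rng.choice(numbers)
    new_value = int(target.group()) + rng.choice([-1, 1])
    return source[:target.start()] + str(new_value) + source[target.end():]


def score(source):
    """Toy fitness (assumed): negative absolute error against 3 * x + 2."""
    namespace = {}
    try:
        exec(source, namespace)  # run the candidate program
        policy = namespace["policy"]
        return -sum(abs(policy(x) - (3 * x + 2)) for x in range(5))
    except Exception:
        return float("-inf")  # programs that fail to run get the worst score


def hill_climb(seed_fn, mutate_fn, score_fn, iterations, rng):
    """Keep the current best program; accept a mutation if it is not worse."""
    best = seed_fn(rng)
    best_score = score_fn(best)
    for _ in range(iterations):
        candidate = mutate_fn(best, rng)
        candidate_score = score_fn(candidate)
        if candidate_score >= best_score:
            best, best_score = candidate, candidate_score
    return best, best_score


def restarted_hill_climb(seed_fn, mutate_fn, score_fn, runs, iterations, rng):
    """Run several independent short searches and return the best result."""
    results = [
        hill_climb(seed_fn, mutate_fn, score_fn, iterations, rng)
        for _ in range(runs)
    ]
    return max(results, key=lambda result: result[1])


if __name__ == "__main__":
    rng = random.Random(0)
    program, fitness = restarted_hill_climb(
        stub_llm_seed, stub_llm_mutate, score, runs=5, iterations=10, rng=rng
    )
    print(program)
    print("score:", fitness)
```

A single run can stall in a local optimum, since each step only changes one part of the program. Restarting from fresh seeds is one common way to escape such stalls, which is in line with the abstract's observation that several shorter runs worked better than one long run. How the iteration budget should be split in practice is not something this toy example can show.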

Authors: Manuel Eberhardinger, James Goodman, Alexander Dockhorn, Diego Perez-Liebana, Raluca D. Gaina, Duygu Çakmak

Link to paper: Link